Anonymous traffic was once the concern of niche teams. Not anymore. Anonymous traffic is cropping up everywhere in companies without much thought. Development teams test from everywhere. Security teams analyze threat infrastructures without revealing themselves. Data teams scrape public data without distorting their datasets. All this traffic has questionable identifiers.

The issue is not whether anonymous traffic should be on a company network. It certainly should be. The issue is how to manage it without negative impacts on network tooling or even outages that no one saw coming.

Where Anonymous Traffic Should Exist in Actual Companies

Anonymous traffic should not be something that companies strive to “run.” It emerges out of necessity. A marketing team needs to preview their ads accurately. A QA team needs to understand the user experience in other geographies. A security team needs to analyze threat infrastructures while remaining undercover.

The problem is that these use cases emerge one at a time. They seem small. In isolation, they are manageable. Eventually, however, they snowball into an unmanageable pattern, and all of a sudden the network is overwhelmed with traffic it was never designed to handle in the first place. Suddenly there is latency, noisy logs, and alerts firing that make no sense to anyone.

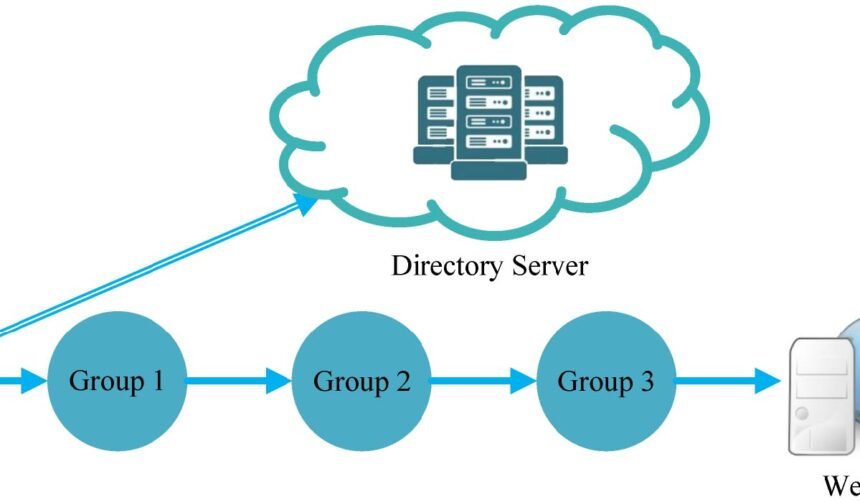

Routing Decisions are More Important Than Most Teams Imagine

One of the most overlooked technical aspects of managing anonymous traffic is how that traffic is routed on the network. This is not just a preference decision. Routing has implications for reliability, what can (and cannot) be made to work with that routing, and how much complexity that routing approach brings to maintenance.

Some teams route their anonymous traffic with vanilla HTTP proxies because they’re the simplest mechanism to deploy. Some route it through old-style VPNs designed to cater to remote teams rather than through heavy traffic from automation tasks. They work in the beginning. Eventually they always break under pressure when the workloads start increasing.

That’s why most teams are best served by routing their anonymous traffic through something like a socks5 proxy server. A socks5 proxy server lives lower in the stack and supports more protocols, which means teams have fewer points of friction with the modern applications they’d like to use for this purpose. When a traffic routing mechanism does not need to rewrite or analyze traffic at every step, it decreases the number of things that can break for unexpected reasons.

Choosing the Right Routing Mechanism is Task-Based

Choosing the right routing mechanism is not about being flashy or having the latest technology stack. Choosing the best routing mechanism for the job at hand is about choosing the right tool for the job.

Some people set up anonymous traffic routing in their companies with vanilla HTTP proxies because they’re the simplest option. Others use outdated VPNs that were never designed to handle this sort of workload in the first place.

These both work for a while. Eventually they always fail when the workload intensifies.

The Problems Caused by Anonymous Traffic Issues are Modular

Performance problems related to anonymous traffic do not emerge in ways that are easy to identify. They emerge as a set of challenges. A single request to a service might take longer than expected to fulfill. A single service might need to be retried before it succeeds. Logs get filled earlier than expected.

In time, all these seemingly minor performance challenges add up to one giant mountain of performance failures. Bandwidth usage patterns might not be evenly distributed. Connection pools might fill unexpectedly. Applications might not cooperate when they hit unexpected load patterns.

Trouble-free companies that manage this aspect of anonymous traffic administration well do not look for maximum performance. They search for consistent performance.

Context Is Important to Security

Anonymous traffic can be suspicious from an external point of view, which is half the goal of using anonymous traffic in the first place. The internal view should never be opaque or blurry, however.

Proper tagging goes a long way. Traffic should be tagged with its purpose, the system that generated it, and who owns it. When unexpected activity shows up on the company’s security monitoring tools, the security team should be able to answer one question: is this expected behavior or should someone be investigating this right now?

Without this context, automated security tools get triggered for legitimate traffic and waste time getting themselves out of a mess while they should be dealing with threats.

External Systems Can Block Traffic Too

It’s easy to focus only on internal systems when discussing the challenges companies face when managing anonymous traffic. Remember: external systems monitor traffic behavior quite aggressively too. Requests that show unrealistic patterns, bursts of unexpected size, and headers that make no sense are picked up quickly.

The companies that find themselves dealing with external rate limiting and blocks generally do so under the delusion that they are being unfairly targeted for blocks and rate limits when they are simply flooding external systems with requests that do not appear like normal behavior.

Slowing things down, spreading requests out instead of overwhelming services, and creating traffic patterns that do not appear anomalous go a long way to avoid blocks and rate limits.

Beta testing also helps by ramping usage gradually instead of bombarding external monitoring services with requests.

Downtime Is Likely to Happen After a Calm Period

A lot of downtime on company networks related to anonymous traffic occurs when all is well. The proverbial party has stopped, and the dust has settled. No one is looking at monitoring dashboards.

New tasks have been added without revisiting work limits in light of changes. No one thought to see whether existing limits on how many tasks a team can perform still make sense in light of their new workloads. Workload limits have been changed without being updated in light of changing usage patterns.

Yet it’s no surprise then that something suddenly goes wrong at some point. A spike occurs out of nowhere. A reverse proxy pool runs out of entries. Processes get stuck when they encounter issues no one anticipated. Revivals take longer than expected because documentation is out of date.

Companies that avoid the cycle of issue management caused by abandoning monitoring practices return to limits on occasion—even when everything works smoothly. They revisit decisions about routing mechanisms and operation assumptions about limits. They also check whether security rules still apply.

Keeping Anonymous Traffic Under Control

Anonymous traffic is not going away. Automated processes are becoming more complex, and companies are using them for more use cases than ever before.

The companies that experience anonymous traffic like it is a natural feature of their networks, rather than something abnormal that needs specific attention.

The correct routing decisions, a few effective segmentation techniques, reliable performance metrics, and tag management can keep anonymous traffic under control rather than making someone anxious about its presence on the network where it behaves like any other reasonable workload.

For More Information Visit Fourmagazine