When people talk about making music with AI, the conversation often drifts into extremes: either it is “instant genius,” or it is “soulless automation.” My experience landed somewhere more practical. Using an AI Song Generator felt less like outsourcing creativity and more like running quick experiments—almost like product testing. You propose a hypothesis (“this should feel cinematic and hopeful at ~120 bpm”), generate a draft, listen for what works, adjust one variable, and repeat. The value is not that every output is perfect. The value is that you can validate direction before you commit time, money, or emotional energy.

The Real Job: Reducing Uncertainty Early

Most creative frustration is uncertainty in disguise:

- “Is this the right vibe for the intro?”

- “Does this chorus lift enough?”

- “Will these lyrics actually sing well?”

- “Is the rhythm too busy for voiceover?”

Traditional workflows answer those questions, but often slowly. A generator answers them faster, even if imperfectly.

PAS (Problem, Agitation, Solution): Why people abandon good ideas

- Problem: You cannot hear the idea soon enough to know if it is worth building.

- Agitation: You spend time setting up instruments and patterns, only to discover the direction was wrong.

- Solution: Generate early drafts quickly so you can decide what is worth producing properly.

How It Works (Without Pretending It Is Magic)

At a high level, you provide a text description or lyrics, and the system outputs an audio draft by assembling musical components—melodic contour, harmonic movement, rhythm, and arrangement choices—into a coherent track.

In my tests, the quality of the result correlated strongly with the quality of the “brief.” When I wrote prompts like a creative director—tempo range, instrumentation, structure cues—the output was more stable.

Two Distinct Modes: “Hypothesis Testing” vs “Lyric Fitting”

1) Description-to-Music = Hypothesis Testing

Use this when you have intent but not words:

- background music for content,

- an opening hook concept,

- a brand mood draft,

- a “scene” soundtrack.

My observation:

This mode is excellent for exploring *directional variants*. If you generate 4–6 drafts with controlled prompt changes, you can identify the best harmonic color and groove quickly.
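To make "controlled prompt changes" concrete, here is a minimal Python sketch. It assumes you keep the brief as a set of named fields. The field names (`mood`, `tempo`, `instruments`, `harmony`) and the helper `controlled_variants` are hypothetical, and no generator API is involved. The code only builds prompt text, varying one field (here, harmonic color) while holding everything else fixed.

```python
# Hypothetical brief fields; these names do not come from any specific platform.
BASE_BRIEF = {
    "mood": "cinematic, hopeful",
    "tempo": "around 120 bpm",
    "instruments": "piano, strings, soft drums",
    "harmony": "major key",
}


def controlled_variants(base: dict, field: str, options: list) -> list:
    """Return one prompt per option, changing only `field` and keeping the rest fixed."""
    if field not in base:
        raise KeyError(f"unknown field: {field}")
    prompts = []
    for option in options:
        brief = {**base, field: option}  # copy the base brief, override a single field
        prompts.append("; ".join(f"{k}: {v}" for k, v in brief.items()))
    return prompts


variants = controlled_variants(
    BASE_BRIEF,
    "harmony",
    ["major key", "minor key", "modal, Dorian feel", "suspended chords, unresolved"],
)
for prompt in variants:
    print(prompt)
```

Each prompt would be submitted separately. Because only `harmony` differs, the differences between drafts are easier to attribute. Since generation is not deterministic, those differences will still include some run-to-run variation.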

2) Lyrics-to-Song = Lyric Fitting

Use this when lyrics exist and you want to hear them “live.”

My observation:

Lyric meter matters. Even strong lyrical writing can feel awkward when sung if line lengths vary dramatically. Light rewrites (shortening lines, clarifying cadence) often improved the outcome more than switching genres.
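One way to spot uneven meter before generating is to run a rough syllable count on each line. The sketch below uses a crude heuristic, assumed here for illustration: it counts vowel groups per word and applies a silent-e adjustment. It is not a linguistic tool. It will miscount many English words, and it does not handle other languages. `rough_syllables` and `flag_uneven_lines` are hypothetical helpers, and the 40% tolerance is an arbitrary starting point.

```python
import re
from statistics import median


def rough_syllables(line: str) -> int:
    """Crude English syllable estimate: vowel groups per word, at least 1 per word."""
    total = 0
    for word in re.findall(r"[a-z]+(?:'[a-z]+)*", line.lower()):
        count = len(re.findall(r"[aeiouy]+", word))
        # A trailing silent 'e' (as in "chase") usually adds no syllable; "-le" often does.
        if word.endswith("e") and not word.endswith("le") and count > 1:
            count -= 1
        total += max(count, 1)
    return total


def flag_uneven_lines(lyrics: str, tolerance: float = 0.4) -> list:
    """Return (line, syllables) pairs that sit far above or below the median line."""
    lines = [ln.strip() for ln in lyrics.splitlines() if ln.strip()]
    counts = [rough_syllables(ln) for ln in lines]
    mid = median(counts)
    return [(ln, n) for ln, n in zip(lines, counts) if abs(n - mid) > tolerance * mid]


LYRICS = """\
We run until the morning light
We chase the echo of the night
And every single thing we ever said was lost somewhere along the road we left behind
Hold on
"""

for line, syllables in flag_uneven_lines(LYRICS):
    print(f"{syllables:>3}  {line}")
```

Lines this check flags are candidates for the light rewrites described above, such as splitting the long line or padding the short one.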

A New Comparison Angle: Where the “Control” Actually Lives

The common misconception is that control equals knobs. In this workflow, control often lives in language.

- With a DAW, you control every note by editing.

- With a generator, you control outcomes by constraint design: what you specify, what you exclude, and how you structure the ask.

When I started treating prompts as “specifications,” I got fewer surprises.
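The following is a minimal sketch of treating prompts as specifications. It assumes you store the brief as data and render it to text only at the end. `PromptSpec` and its three fields mirror the three levers above: what you specify, what you exclude, and how you structure the ask. It is an illustrative structure, not any platform's input format.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical brief structure; not any generator's API."""
    specify: dict = field(default_factory=dict)    # what you want, as named attributes
    exclude: list = field(default_factory=list)    # what you rule out
    structure: list = field(default_factory=list)  # ordered sections of the track

    def render(self) -> str:
        """Turn the spec into one plain-language prompt."""
        parts = [f"{k}: {v}" for k, v in self.specify.items()]
        if self.structure:
            parts.append("structure: " + " -> ".join(self.structure))
        if self.exclude:
            parts.append("avoid: " + ", ".join(self.exclude))
        return ". ".join(parts) + "."


spec = PromptSpec(
    specify={"mood": "warm, optimistic", "tempo": "about 100 bpm"},
    exclude=["harsh distortion", "busy hi-hats"],
    structure=["intro", "verse", "chorus", "outro"],
)
print(spec.render())
```

Keeping the spec as data, rather than as free text, makes it easy to see exactly what changed between two prompts.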

Comparison Table: Idea Validation vs Craft Execution

| What You Need Right Now | AI Song Generator | DAW Production | Producer/Composer | Stock Music |
| --- | --- | --- | --- | --- |
| Validate a mood quickly | Strong | Medium (time cost) | Medium (briefing cost) | Medium (search cost) |
| Generate multiple directions in one sitting | Strong | Weak-to-medium | Weak | Weak |
| Detailed arrangement control | Limited | Strong | Strong | None |
| Reliable repeatability | Medium | Strong | Strong | Strong |
| Best when | Early exploration | Refinement and finishing | High-stakes interpretation | “Safe filler” needs |
| Typical risk | Inconsistent drafts | Time-consuming setup | Higher cost | Generic feel |

What I Found Most Useful: The “Single-Variable Iteration” Method

The fastest path to better drafts was not endless regeneration. It was disciplined iteration:

- Keep the prompt constant; generate 2–3 outputs.

- Choose the best one; write down one flaw.

- Change only one thing in the prompt.

- Generate again and compare.

Examples of single-variable changes

- “same vibe, but 10 bpm slower”

- “reduce percussion complexity”

- “make the chorus lift more obvious”

- “switch main instrument from synth to guitar”

- “more space for voiceover; less melodic density”

This method made results feel more predictable, because I could see cause and effect.
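It is easy to break the single-variable rule by accident. The Python sketch below assumes each brief is a dictionary of named fields, and it refuses a new round unless exactly one field changed. `IterationLog` and `changed_fields` are hypothetical names. The log also keeps the flaw you wrote down for each round.

```python
from dataclasses import dataclass, field


def changed_fields(previous: dict, current: dict) -> set:
    """Names of fields whose values differ (including fields added or removed)."""
    keys = previous.keys() | current.keys()
    return {k for k in keys if previous.get(k) != current.get(k)}


@dataclass
class IterationLog:
    """Hypothetical helper: one prompt change per round, plus the flaw it targets."""
    briefs: list = field(default_factory=list)
    notes: list = field(default_factory=list)

    def add(self, brief: dict, flaw_addressed: str = "") -> None:
        if self.briefs:
            changed = changed_fields(self.briefs[-1], brief)
            if len(changed) != 1:
                raise ValueError(
                    f"change exactly one field per round; changed: {sorted(changed)}"
                )
        self.briefs.append(dict(brief))  # store a copy so later edits don't leak in
        self.notes.append(flaw_addressed)


log = IterationLog()
base = {"mood": "hopeful", "tempo": "120 bpm", "percussion": "busy", "lead": "synth"}
log.add(base)
log.add({**base, "tempo": "110 bpm"}, "verse felt rushed")
try:
    # Two fields differ from the last brief, so this round is rejected.
    log.add({**base, "tempo": "100 bpm", "lead": "guitar"}, "two changes at once")
except ValueError as err:
    print(err)
```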

What Improves Results (My Practical Prompt Checklist)

1) Give an “audience context”

- “for a travel vlog montage”

- “for a product demo with voiceover”

- “for a cinematic teaser intro”

2) Specify “energy curve”

- “restrained verse, bigger chorus”

- “gradual build, no sudden drops”

- “steady minimal groove throughout”

3) Choose a tight instrument palette

- “warm bass + soft drums + clean guitar”

- “pads + sparse percussion + subtle arpeggio”

4) Include an “avoid list”

This reduces accidental stylistic drift:

- “avoid harsh distortion”

- “avoid busy hi-hats”

- “avoid abrupt key changes”

- “avoid overly bright lead”
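You can treat the four checklist items as required fields. This sketch assumes a brief stored as a dictionary with the hypothetical keys `audience`, `energy_curve`, `palette`, and `avoid`. `checklist_gaps` reports any missing items. Its limit of four instruments for a "tight" palette is an arbitrary threshold, not a rule from any tool. `render_brief` joins the fields into one prompt line.

```python
CHECKLIST = ("audience", "energy_curve", "palette", "avoid")


def checklist_gaps(brief: dict, max_instruments: int = 4) -> list:
    """Return warnings for a brief; an empty list means every item is present."""
    warnings = [f"missing {item}" for item in CHECKLIST if not brief.get(item)]
    palette = brief.get("palette") or []
    if len(palette) > max_instruments:  # arbitrary cutoff for a "tight" palette
        warnings.append(
            f"palette has {len(palette)} instruments; consider {max_instruments} or fewer"
        )
    return warnings


def render_brief(brief: dict) -> str:
    """Join the checklist fields into one prompt line (assumes no gaps)."""
    return (
        f"{brief['audience']}; {brief['energy_curve']}; "
        f"instruments: {' + '.join(brief['palette'])}; "
        f"avoid: {', '.join(brief['avoid'])}"
    )


brief = {
    "audience": "for a product demo with voiceover",
    "energy_curve": "steady minimal groove throughout",
    "palette": ["warm bass", "soft drums", "clean guitar"],
    "avoid": ["busy hi-hats", "overly bright lead"],
}
gaps = checklist_gaps(brief)
print(gaps if gaps else render_brief(brief))
```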

Limitations Worth Knowing (So You Do Not Over-Promise It to Yourself)

1) It is not deterministic

Two generations from the same prompt can differ. That is helpful for exploration, but less ideal when you need strict repeatability.

2) Vocals are the most variable element

Instrumentals often stabilize sooner. With vocals, intelligibility and phrasing can fluctuate, especially with complex lyric meter.

3) You may need multiple generations

If your expectation is “one click and done,” you will feel friction. If your expectation is “drafts,” it becomes a workable pipeline.

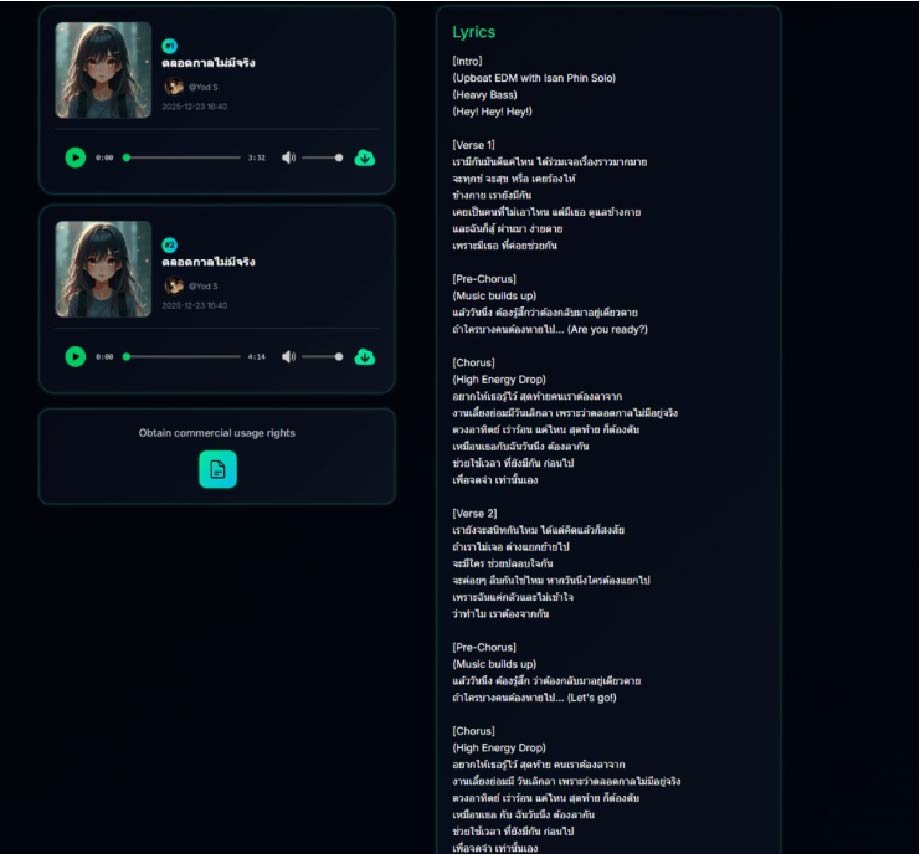

4) Commercial use and licensing deserve attention

If you plan to publish or monetize, read the platform’s terms and plan entitlements carefully. Marketing phrases like “royalty-free” are not the same as a legal permission boundary; details matter in real-world distribution.

A Measured External Reference (If You Want Context, Not Hype)

If you want a neutral perspective on how generative AI is evolving across creative applications, broader reporting such as Stanford’s AI Index can help frame the trend line without attaching credibility to any single platform.

Who Benefits Most From This Approach

Strong fit

- creators who need a reliable “first draft engine”

- lyric writers who want to audition phrasing quickly

- teams building mood boards for campaigns

- indie developers prototyping game or app soundscapes

Weaker fit

- production workflows that require surgical control

- projects where sound design is the product itself

- releases that must match a highly specific reference track

Closing: Treat It Like a Lab, Not a Lottery

In my testing, AI Song Maker became most valuable when I stopped expecting a miracle and started running experiments. The generator gives you speed, variance, and early feedback. You bring intent, taste, and selection. If you do that, an AI song generator becomes a practical part of a modern workflow—not because it replaces craft, but because it helps you reach the point where craft is worth applying.