Introduction

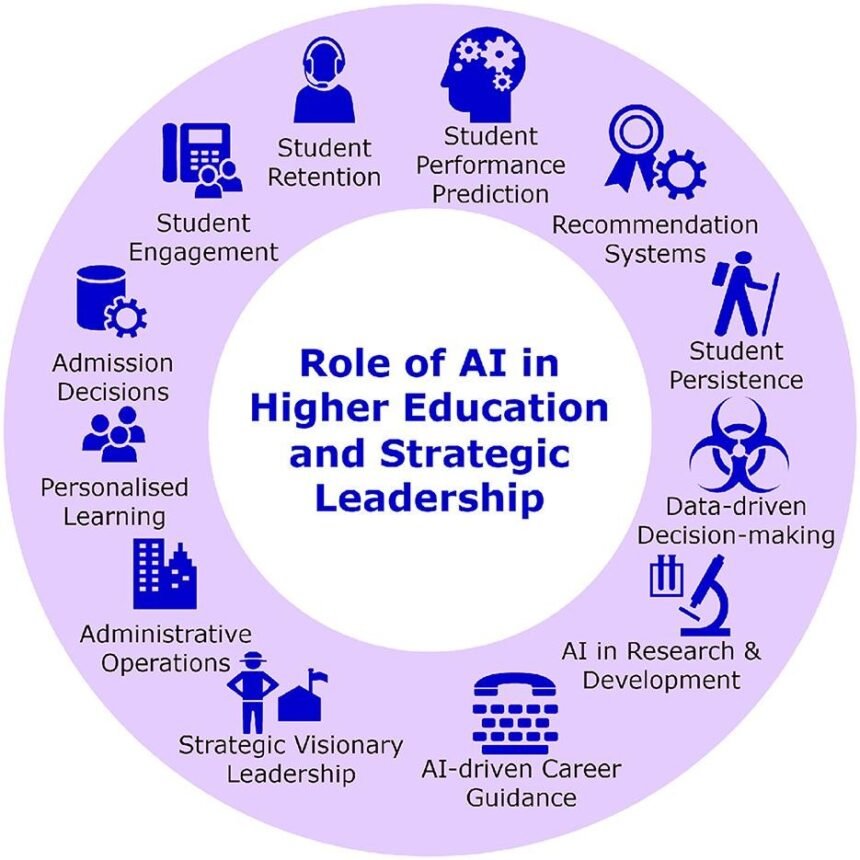

Artificial Intelligence (AI) is quickly becoming a core part of higher education. From helping admissions teams review applications faster to guiding students with instant chat support, AI is no longer a future idea; it is already here. Universities across the world are experimenting with AI to save time, personalize learning, and improve student services.

But with great opportunities come serious questions. How do we make sure AI is fair? Who is responsible when mistakes happen? What rules should guide the use of student data? These are not small concerns, and that is why policy and regulation matter.

This guide explains, in simple terms, the key areas universities should think about when adopting AI. It is not about slowing down innovation but about making sure technology works for students, not against them.

Why AI Needs Regulation in Universities

AI tools can bring enormous value to education, but without oversight, they can also create new risks. Some of the main concerns include:

- Bias in admissions decisions: If AI is trained on biased data, it may favor or reject students unfairly. For example, if historical admissions data shows a preference for certain groups, the AI may repeat those patterns.

- Privacy issues: AI needs large amounts of data to work well. If this data includes personal or sensitive student details, misuse or leaks can harm students and erode their trust in the institution.

- Over-reliance on automation: While AI can save time, it should not replace human judgment in areas like admissions interviews, counseling, or grading complex assignments.

- Student confidence: If students feel decisions are being made by a “black box” with no explanation, they may lose trust in the institution.

Clear policies help universities find the right balance between innovation and responsibility.

Key Areas of Policy to Consider

When adopting AI, universities should focus on a few key policy areas that ensure technology is used fairly, safely, and responsibly.

1. Data Privacy and Security

Student data is one of the most valuable assets universities handle. AI systems often need access to large datasets, ranging from grades and attendance to financial information, in order to function. Institutions should:

- Build strong data protection systems.

- Seek explicit student consent before collecting personal data.

- Follow all applicable privacy laws, such as the General Data Protection Regulation (GDPR) in the European Union or the Family Educational Rights and Privacy Act (FERPA) in the United States.

- Limit how long data is stored and who can access it.

Example: A university using AI chatbots to answer student queries must make sure those conversations are encrypted and not shared with outside vendors without permission.

2. Transparency in Admissions and Grading

If AI is used in critical areas like admissions or grading, students and applicants should be told how decisions are made. Transparency can include:

- Explaining how the AI tool supports decisions (e.g., “the system calculates GPAs from transcripts”).

- Making it clear where humans are still in charge of final decisions.

- Creating an appeals process so students can challenge outcomes they believe are unfair.

Example: If AI helps screen applications, the admissions team should make clear that final acceptance decisions are still made by staff, not by the system alone.

3. Ethical Use of AI

Universities should define when and how AI can be used. Some guiding questions include:

- Does this AI tool improve fairness and efficiency?

- Does it replace tasks that require human empathy, like counseling?

- Can it unintentionally disadvantage certain student groups?

AI should be a supporting tool, not the main decision-maker in areas that affect a student’s future.

4. Equal Access for All Students

Not all students have equal digital access or skills. AI policies should ensure that new tools do not widen the gap between privileged and disadvantaged groups.

- Universities should test AI tools with diverse student populations.

- Accessibility features must be built in (voice options, multiple languages, screen reader compatibility).

- Support systems should exist for students who struggle with digital platforms.

Example: If a university uses AI for scheduling classes, the system should not disadvantage students with disabilities or those who rely on part-time jobs.

Practical Steps for Universities

So how can universities move from ideas to action? Here are some practical steps:

- Create an AI policy framework – Write down clear guidelines that cover admissions, grading, student engagement, and administrative tasks.

- Set up an ethics board – A small team of faculty, IT staff, and student representatives can review every new AI tool before it is adopted.

- Provide faculty training – Faculty members should understand how AI tools work so they can use them confidently and responsibly.

- Test for bias regularly – Institutions should review outcomes (e.g., admissions results, grading fairness) to catch hidden biases.

- Involve students – Students should have a voice in how AI is used. Collect feedback through surveys, forums, or pilot programs.

These steps help ensure AI adoption is both innovative and ethical.
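To make “test for bias regularly” more concrete, the sketch below compares admission rates across applicant groups and flags any group whose rate falls well below the highest one. It is a minimal illustration under stated assumptions, not a complete fairness audit: the function names, the `(group, admitted)` record format, the group labels, and the 0.8 ratio (loosely borrowed from the “four-fifths” rule of thumb used in US employment-selection guidance) are all hypothetical choices made for this example.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Return the share of applicants admitted in each group.

    `outcomes` is an iterable of (group, admitted) pairs, where `admitted`
    is True or False. This record format is a hypothetical example.
    """
    totals = defaultdict(int)
    admitted = defaultdict(int)
    for group, was_admitted in outcomes:
        totals[group] += 1
        if was_admitted:
            admitted[group] += 1
    return {group: admitted[group] / totals[group] for group in totals}


def flag_disparities(rates, ratio=0.8):
    """Return groups whose rate is below `ratio` times the highest rate.

    The 0.8 default is an assumed review threshold, not a legal standard
    for university admissions.
    """
    if not rates:
        return []
    highest = max(rates.values())
    return sorted(group for group, rate in rates.items() if rate < ratio * highest)


if __name__ == "__main__":
    # Hypothetical data: 100 applicants in each of two groups.
    records = (
        [("Group A", True)] * 40 + [("Group A", False)] * 60
        + [("Group B", True)] * 25 + [("Group B", False)] * 75
    )
    rates = selection_rates(records)
    print(rates)                    # {'Group A': 0.4, 'Group B': 0.25}
    print(flag_disparities(rates))  # ['Group B']
```

A flagged group is a prompt for human review, not proof of bias: differences in rates can have legitimate explanations, and small groups produce noisy rates, so any check like this works best alongside the ethics board and appeals process described above.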

The Role of Engagement Tools

AI in higher education is not limited to admissions and grading. It also plays a growing role in how students connect with their university experience. Institutions now use AI-powered student engagement tools to track participation, send reminders, and provide real-time support.

For example:

- Smart apps can remind students about assignment deadlines.

- AI chatbots can answer financial aid questions instantly.

- Personalized dashboards can show each student their progress and suggest resources.

While these tools can improve student motivation and outcomes, they also need proper oversight. Policies should ensure these tools respect student privacy and do not overwhelm learners with unnecessary notifications.

Looking Ahead

AI adoption in higher education will only grow in the coming years, and governments are starting to pay attention. The European Union has adopted the AI Act, which classifies certain AI systems used in education, such as those that determine admission or evaluate learning outcomes, as “high-risk.” Countries like the United States and Canada are also discussing frameworks for responsible AI.

For universities, this means policies must be flexible and forward-looking. What works today may need updates tomorrow as technology and laws change. Taking a proactive approach, instead of waiting for government mandates, will allow institutions to lead rather than follow.

Conclusion

Artificial Intelligence offers higher education powerful new possibilities, from smarter admissions processes to personalized learning experiences. But power without responsibility can create serious risks.

By focusing on privacy, transparency, ethics, and equal access, universities can ensure AI serves students well. The goal is not to replace human judgment but to strengthen it with the support of technology.

A thoughtful, practical policy framework helps keep AI a force for good, allowing institutions to improve efficiency while protecting fairness and trust. In the end, universities that embrace both innovation and accountability will be best placed to shape the future of education.